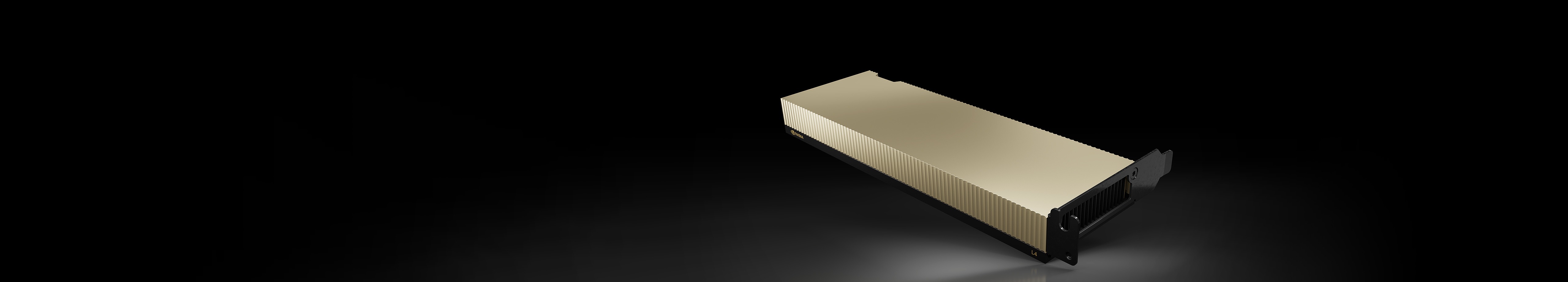

NVIDIA L4 Tensor Core GPU

The breakthrough universal accelerator for efficient video, AI, and graphics.

Data sheet

The NVIDIA L4 Tensor Core GPU, powered by the NVIDIA Ada Lovelace architecture, delivers universal, energy-efficient acceleration for video, AI, visual computing, graphics, virtualization, and more. Packaged in a low-profile form factor, L4 is a cost-effective, energy-efficient solution for high throughput and low latency in every server, from the edge to the data center to the cloud.

Measured performance: 8x L4 vs 2S Intel 8362 CPU server comparison, end-to-end video pipeline with CV-CUDA® decode, preprocessing, inference (SegFormer), postprocessing, encode, NVIDIA® TensorRT™ 8.6 vs CPU-only pipeline using OpenCV 4.7, PyTorch inference.

Transform video applications with the power of NVIDIA L4. Whether streaming live to millions of viewers, enabling users to build creative stories, or delivering immersive augmented and virtual reality (AR/VR) experiences, servers equipped with L4 allow hosting up to 1,040 concurrent AV1 video streams at 720p30 for mobile users.¹

With fourth-generation Tensor Cores and 1.5X larger GPU memory, NVIDIA L4 GPUs paired with the CV-CUDA® library take video content-understanding to a new level. L4 delivers 120X higher AI video performance than CPU-based solutions, letting enterprises gain real-time insights to personalize content, improve search relevance, detect objectionable content, and implement smart-space solutions.

¹ Measured performance: 8x L4 AV1 low-latency P1 preset encode at 720p30.
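As a rough back-of-the-envelope check, the 1,040-stream figure applies to the 8x L4 server described in the footnote, so a per-GPU share can be estimated as below. This is only a sketch: it assumes the encode load divides evenly across the eight GPUs, which the data sheet does not state, and the names `streams_per_gpu` and `servers_needed` are illustrative.

```python
# Figures from the data sheet: 1,040 AV1 streams (720p30) measured on an 8x L4 server.
TOTAL_STREAMS = 1040
GPUS_PER_SERVER = 8

# Assumption: streams are spread evenly across the GPUs in the server.
streams_per_gpu = TOTAL_STREAMS / GPUS_PER_SERVER


def servers_needed(target_streams: int) -> int:
    """Ceiling division: number of 8x L4 servers for a target stream count."""
    return -(-target_streams // TOTAL_STREAMS)


print(f"Approximate streams per L4: {streams_per_gpu:.0f}")
print(f"8x L4 servers for 5,000 streams: {servers_needed(5000)}")
```

Real capacity depends on preset, bitrate, and resolution, so the measured configuration (AV1, low-latency P1 preset, 720p30) should be treated as the only validated data point.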

² Results from EPA calculator using 1.677MW savings.

8x L4 vs. 2S Intel 8362 CPU server TCO comparison: end-to-end video pipeline with CV-CUDA pre- and postprocessing, decode, inference (SegFormer), encode, TRT 8.6 vs. CPU-only pipeline using OpenCV 4.7, PyTorch inference.

Measured performance: L4 vs T4 image generation, 512×512 stable diffusion v2.1, FP16, TensorRT 8.5.2.

Generative AI for images and text makes customers' lives more convenient and experiences more immersive across all industries. NVIDIA L4 supercharges compute-intensive generative AI inference by delivering up to 2.5X higher performance compared to the previous GPU generation. And with 50 percent more memory capacity, L4 enables larger image generation, up to 1024×768, which wasn’t possible on the previous GPU generation.
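The memory and resolution figures above can be related with simple arithmetic. The sketch below derives the implied previous-generation capacity from the 24 GB listed in the specification table and compares pixel counts between the 512×512 benchmark resolution and the 1024×768 maximum. The data sheet does not claim that memory use scales linearly with pixel count, so the ratio is illustrative only.

```python
L4_MEMORY_GB = 24       # from the specification table
MEMORY_INCREASE = 1.5   # "50 percent more memory capacity"

# Implied capacity of the previous generation (derived, not quoted in the data sheet).
previous_memory_gb = L4_MEMORY_GB / MEMORY_INCREASE

benchmark_pixels = 512 * 512   # resolution used in the measured benchmark
max_pixels = 1024 * 768        # largest image size cited for L4
pixel_ratio = max_pixels / benchmark_pixels

print(f"Implied previous-generation memory: {previous_memory_gb:.0f} GB")
print(f"1024x768 has {pixel_ratio:.0f}x the pixels of 512x512")
```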

Measured performance: Real-time rendering: NVIDIA Omniverse™ performance for real-time rendering at 1080p and 4K with NVIDIA Deep Learning Super Sampling (DLSS) 3.

Ray-Tracing: Gaming performance geomean for AAA titles supporting ray tracing and DLSS 3.

With third-generation RT Cores and AI-powered NVIDIA Deep Learning Super Sampling 3 (DLSS 3), NVIDIA L4 delivers over 4X higher performance for AI-based avatars, NVIDIA Omniverse™ virtual worlds, cloud gaming, and virtual workstations. These capabilities enable creators to build real-time, cinematic-quality graphics and scenes for immersive visual experiences not possible with CPUs.

NVIDIA L4 is an integral part of the NVIDIA data center platform. Built for video, AI, NVIDIA RTX™ virtual workstation (vWS), graphics, simulation, data science, and data analytics, the platform accelerates over 3,000 applications and is available everywhere at scale, from data center to edge to cloud, delivering both dramatic performance gains and energy-efficiency opportunities.

Optimized for mainstream deployments, L4 delivers a low-profile form factor operating in a 72W low-power envelope, making it an efficient, cost-effective solution for any server or cloud instance from NVIDIA’s partner ecosystem.

Optimized to streamline AI development and deployment, the NVIDIA AI Enterprise software suite includes AI solution workflows, frameworks, pretrained models, and infrastructure optimization that are certified to run on common data center platforms and mainstream NVIDIA-Certified Systems™ with NVIDIA L4 GPUs.

NVIDIA AI Enterprise is a license addition for NVIDIA L4 GPUs, making AI accessible to nearly every organization with the highest performance in training, inference, and data science. NVIDIA AI Enterprise, together with NVIDIA L4, simplifies the building of an AI-ready platform, accelerates AI development and deployment, and delivers performance, security, and scalability to gather insights faster and achieve business value sooner.

The NVIDIA L4 Tensor Core graphics processor offers exceptional capabilities in video analysis and transcoding. Built on the Ada Lovelace architecture, it provides a significant performance improvement over its predecessor, the T4. A server equipped with eight L4 GPUs can host up to 1,040 concurrent AV1 video streams at 720p30 for mobile users, making it well suited to streaming services and content delivery networks. The L4 delivers up to 120 times higher AI video performance than CPU-based solutions, enabling real-time insights for personalized content, improved search relevance, and smart-space solutions. Its video engines support AV1 encoding and decoding, while its fourth-generation Tensor Cores accelerate video analytics tasks such as object detection and content understanding. Additionally, the energy efficiency and single-slot, low-profile design of the L4 make it suitable for deployment in various environments, from data centers to edge locations. This combination of performance, versatility, and efficiency makes the NVIDIA L4 a strong option for organizations looking to optimize their video processing and analytics infrastructure.

The NVIDIA L4 Tensor Core graphics processor is an efficient and versatile solution for AI inference workloads, offering significant performance improvements over its predecessor, the T4. Built on the NVIDIA Ada Lovelace architecture, the L4 features fourth-generation Tensor Cores and third-generation RT Cores, making it suitable for a wide range of AI applications. With 24 GB of GPU memory and an energy-efficient 72 W power envelope, the L4 delivers up to 2.7 times the generative AI performance of the previous generation. It handles AI inference tasks across various domains, including computer vision, natural language processing, and recommendation systems. The hardware-accelerated image and video processing engines, including AV1 encoding and decoding, make the L4 particularly effective for AI-driven video analytics and transcoding. Its single-slot, low-profile design facilitates integration with mainstream servers, making it a practical choice for organizations looking to deploy AI inference at scale in data centers or edge computing environments. The versatility and performance of the L4 position it as a universal AI inference accelerator, capable of handling diverse workloads, from video streaming to drug discovery.

The NVIDIA A40 graphics processor is a powerful solution for advanced rendering applications, leveraging the cutting-edge Ampere architecture to deliver exceptional performance and efficiency. Designed specifically for demanding rendering workloads in industries such as media and entertainment, architecture, and automotive design, the A40 features a robust array of CUDA Cores and Tensor Cores. This configuration enables the handling of complex 3D rendering tasks, real-time ray tracing, and AI-assisted graphics with remarkable speed and precision.

The A40 supports NVIDIA’s RTX technology, allowing for photorealistic rendering and real-time simulation of lighting, shadows, and reflections, streamlining creative workflows and reducing time-to-market for digital content creators and designers. Its high memory bandwidth ensures smooth handling of large datasets and intricate visual details, while compatibility with professional NVIDIA software tools, such as RTX Renderer and Omniverse, simplifies integration with existing pipelines.

Overall, the NVIDIA A40 graphics processor redefines high-end rendering capabilities, offering unmatched performance and fidelity, empowering professionals to create stunning visual experiences and push the boundaries of digital content creation.

The NVIDIA L4 Tensor Core graphics processor marks a significant advancement in graphics and visualization workloads, delivering over 4 times the performance compared to its predecessor, the T4. Built on the Ada Lovelace architecture, the L4 is equipped with third-generation RT Cores and AI-based DLSS 3 technology, allowing it to handle demanding tasks such as AI-driven avatars, NVIDIA Omniverse virtual worlds, cloud gaming, and virtual workstations. These capabilities enable creators to render cinematic-quality graphics and highly detailed scenes in real-time, providing immersive visual experiences that were previously unattainable with earlier processors.

The versatility of the L4 extends to professional visualization applications, including Computer-Aided Design (CAD) and Computer-Aided Engineering (CAE), making it an excellent choice for designers and engineers. With its energy-efficient 72 W power envelope and low-profile single-slot design, the L4 can be easily integrated into mainstream servers, enabling organizations to deploy powerful graphics and visualization capabilities in data centers, edge locations, and cloud environments.

The NVIDIA L4 Tensor Core graphics processor offers significant advancements in generative AI workloads, delivering up to 2.7 times the performance compared to its predecessor, the NVIDIA T4. Built on the Ada Lovelace architecture, the L4 features fourth-generation Tensor Cores and 24 GB of GDDR6 memory, enabling it to handle larger and more complex generative AI models. This increased memory capacity allows for image generation up to a resolution of 1024×768, which was not possible with the T4 graphics processor.

The versatility of the L4 makes it well suited to a wide range of generative AI applications, including text-to-image generation, AI-powered avatars, and natural language processing tasks. Its energy-efficient design, operating within a 72 W power envelope, makes it an attractive option for large-scale deployments in data centers and edge computing environments. The combination of performance, efficiency, and versatility positions the L4 as a powerful solution for organizations looking to accelerate their generative AI workflows while maintaining cost-effectiveness and sustainability.

| Specification | L4 |
| --- | --- |
| FP32 | 30.3 teraFLOPS |
| TF32 Tensor Core | 120 teraFLOPS* |
| FP16 Tensor Core | 242 teraFLOPS* |
| BFLOAT16 Tensor Core | 242 teraFLOPS* |
| FP8 Tensor Core | 485 teraFLOPS* |
| INT8 Tensor Core | 485 TOPS* |
| GPU memory | 24 GB |
| GPU memory bandwidth | 300 GB/s |
| NVENC / NVDEC / JPEG decoders | 2 / 4 / 4 |
| Max thermal design power (TDP) | 72 W |
| Form factor | 1-slot low-profile, PCIe |
| Interconnect | PCIe Gen4 x16, 64 GB/s |
| Server options | Partner and NVIDIA-Certified Systems with 1–8 GPUs |

* Shown with sparsity. Specifications are one-half lower without sparsity.
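
To make the sparsity footnote concrete, the sketch below halves each starred Tensor Core figure to get the dense (non-sparse) value and divides by the 72 W TDP for a rough per-watt number. The dictionary and helper names are illustrative, and the per-watt figure assumes peak throughput at full TDP, which is an idealization rather than a measured result.

```python
# Starred values from the specification table (shown with sparsity).
# Units are teraFLOPS, except INT8, which is in TOPS.
SPARSE_THROUGHPUT = {
    "TF32 Tensor Core": 120,
    "FP16 Tensor Core": 242,
    "BFLOAT16 Tensor Core": 242,
    "FP8 Tensor Core": 485,
    "INT8 Tensor Core": 485,
}
TDP_WATTS = 72


def dense(sparse_value: float) -> float:
    """Per the footnote, figures without sparsity are one-half of the listed values."""
    return sparse_value / 2


for name, sparse_value in SPARSE_THROUGHPUT.items():
    dense_value = dense(sparse_value)
    # Idealized efficiency: peak dense throughput divided by maximum TDP.
    per_watt = dense_value / TDP_WATTS
    print(f"{name}: {dense_value:g} dense, about {per_watt:.2f} per watt at TDP")
```

The FP32 figure (30.3 teraFLOPS) is not starred in the table, so it is left out of the halving.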