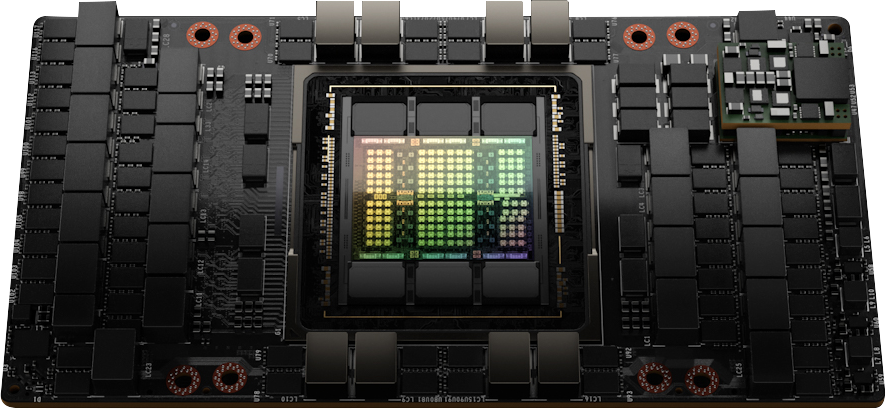

NVIDIA H100 Tensor Core GPU

Extraordinary performance, scalability, and security for every data center.

Tap into exceptional performance, scalability, and security for every workload with the NVIDIA H100 Tensor Core GPU. With the NVIDIA NVLink™ Switch System, up to 256 H100 GPUs can be connected to accelerate exascale workloads. The GPU also includes a dedicated Transformer Engine to solve trillion-parameter language models. Together, the technology innovations in H100 can speed up large language models (LLMs) by as much as an incredible 30X over the previous generation, delivering industry-leading conversational AI.

Mixture of Experts (395 Billion Parameters)

Projected performance subject to change. Training a Mixture of Experts (MoE) Transformer Switch-XXL variant with 395B parameters on a 1T-token dataset | A100 cluster: HDR IB network | H100 cluster: NVLink Switch System, NDR IB

NVIDIA H100 GPUs feature fourth-generation Tensor Cores and the Transformer Engine with FP8 precision, which provide up to 9X faster training than the prior generation for mixture-of-experts (MoE) models. The combination of fourth-generation NVLink, which offers 900 gigabytes per second (GB/s) of GPU-to-GPU interconnect; the NVLink Switch System, which accelerates communication between GPUs across nodes; PCIe Gen5; and NVIDIA Magnum IO™ software delivers efficient scalability from small enterprises to massive, unified GPU clusters.
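To see why interconnect bandwidth matters when scaling training, a bandwidth-only estimate of gradient synchronization helps. The sketch below uses the textbook ring all-reduce model, in which each of N GPUs sends 2(N-1)/N of the buffer; NCCL may pick a different algorithm on NVLink Switch systems, so treat the result as an illustrative lower bound, not a measurement. It assumes the 900 GB/s NVLink figure is a bidirectional total (about 450 GB/s per direction), and the 10 GB gradient size is hypothetical.

```python
def ring_allreduce_seconds(num_bytes: float, num_gpus: int, link_bytes_per_s: float) -> float:
    """Bandwidth-only lower bound for a ring all-reduce (ignores latency and compute)."""
    if num_gpus < 2:
        return 0.0  # nothing to synchronize
    # Reduce-scatter plus all-gather: each GPU sends 2 * (N - 1) / N of the buffer.
    bytes_sent = 2 * (num_gpus - 1) / num_gpus * num_bytes
    return bytes_sent / link_bytes_per_s


# Assumption: 900 GB/s is the bidirectional total, so roughly 450 GB/s each way.
NVLINK4_PER_DIRECTION = 450e9
grad_bytes = 10e9  # hypothetical 10 GB of gradients per training step
t = ring_allreduce_seconds(grad_bytes, 8, NVLINK4_PER_DIRECTION)
print(f"8-GPU ring all-reduce of 10 GB: at least {t * 1e3:.1f} ms")
```

Halving the link bandwidth doubles this bound, which is why the per-step synchronization cost grows quickly on slower fabrics.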

Deploying H100 GPUs at data center scale delivers outstanding performance and brings the next generation of exascale high-performance computing (HPC) and trillion-parameter AI within the reach of all researchers.

AI solves a wide array of business challenges using an equally wide array of neural networks. A great AI inference accelerator has to deliver not only the highest performance but also the versatility to accelerate all of these networks.

H100 further extends NVIDIA's market-leading position in inference with several advancements that accelerate inference by up to 30X and deliver the lowest latency. Fourth-generation Tensor Cores speed up all precisions, including FP64, TF32, FP32, FP16, and INT8, and the Transformer Engine uses FP8 and FP16 together to reduce memory usage and increase performance while still maintaining accuracy for large language models.
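As a rough illustration of the FP8 side of that trade-off, the sketch below emulates rounding values to the FP8 E4M3 format (4 exponent bits, 3 mantissa bits, largest finite value 448) with a simple per-tensor scale. It is a plain-Python model written for this example, not the Transformer Engine API; the real library manages scaling factors itself (for example, from a history of observed maxima), and the function names here are hypothetical.

```python
import math

# Hypothetical helpers; not part of NVIDIA Transformer Engine.
E4M3_MAX = 448.0           # largest finite E4M3 value
E4M3_MIN_NORMAL_EXP = -6   # smallest normal exponent; below it values are subnormal


def quantize_e4m3(x: float) -> float:
    """Round x to the nearest E4M3-representable value (ties to even), saturating at +/-448."""
    if x == 0.0 or math.isnan(x):
        return x
    sign = -1.0 if x < 0 else 1.0
    a = min(abs(x), E4M3_MAX)
    # frexp gives a = m * 2**e with 0.5 <= m < 1, so floor(log2(a)) == e - 1.
    _, e = math.frexp(a)
    exp = max(e - 1, E4M3_MIN_NORMAL_EXP)
    step = 2.0 ** (exp - 3)  # spacing between neighbouring values with 3 mantissa bits
    # Python's round() breaks ties to the even integer, matching round-to-nearest-even.
    return sign * min(round(a / step) * step, E4M3_MAX)


def fp8_roundtrip(values: list[float]) -> list[float]:
    """Scale so the largest magnitude maps to E4M3_MAX, quantize, then undo the scale."""
    amax = max(abs(v) for v in values)
    scale = E4M3_MAX / amax if amax > 0 else 1.0
    return [quantize_e4m3(v * scale) / scale for v in values]


tensor = [0.001, -0.02, 0.5, 3.0]
print(fp8_roundtrip(tensor))
```

Each FP8 value takes 1 byte versus 2 bytes for FP16, which is where the memory saving comes from; the scale factor keeps the largest magnitude near 448 so that small values are less likely to be flushed to zero.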

Megatron Chatbot Inference (530 Billion Parameters)

Projected performance subject to change. Inference on Megatron 530B parameter model based chatbot for input sequence length = 128, output sequence length = 20 | A100 cluster: HDR IB network | H100 cluster: NVLink Switch System, NDR IB

Projected performance subject to change. 3D FFT (4K^3) throughput | A100 cluster: HDR IB network | H100 cluster: NVLink Switch System, NDR IB

Genome Sequencing (Smith-Waterman) | 1 A100 | 1 H100

The NVIDIA data center platform consistently delivers performance gains beyond Moore’s Law. And H100’s new breakthrough AI capabilities further amplify the power of HPC+AI to accelerate time to discovery for scientists and researchers working on solving the world’s most important challenges.

H100 triples the floating-point operations per second (FLOPS) of double-precision Tensor Cores, delivering 60 teraFLOPS of FP64 computing for HPC. AI-fused HPC applications can also leverage H100’s TF32 precision to achieve one petaFLOP of throughput for single-precision matrix-multiply operations, with zero code changes.
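TF32 keeps FP32's 8-bit exponent (and therefore its range) but only 10 of its 23 mantissa bits, which is why FP32 code can run on TF32 Tensor Cores unchanged at somewhat reduced precision. The sketch below emulates that precision loss on the CPU by rounding away the low 13 mantissa bits; the round-to-nearest-even choice is an assumption for illustration, and the exact conversion performed by the hardware or math libraries may differ.

```python
import struct


def to_tf32(x: float) -> float:
    """Round x (first rounded to FP32) to TF32 precision: 8-bit exponent, 10-bit mantissa.

    The low 13 of FP32's 23 mantissa bits are dropped with round-to-nearest-even.
    """
    if x != x:  # NaN: return unchanged so the payload cannot round into infinity
        return x
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    lsb = (bits >> 13) & 1  # lowest mantissa bit that TF32 keeps, used to break ties
    bits = (bits + 0x0FFF + lsb) & 0xFFFFE000
    return struct.unpack("<f", struct.pack("<I", bits))[0]


for v in (1.0 + 2**-10, 1.0 + 2**-11, 3.14159265):
    print(v, "->", to_tf32(v))
```

The value 1 + 2^-10 survives unchanged because it needs only 10 mantissa bits, while 1 + 2^-11 sits exactly halfway between two TF32 values and rounds to the even one, 1.0.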

H100 also features new DPX instructions that deliver 7X higher performance over A100 and 40X speedups over CPUs on dynamic programming algorithms such as Smith-Waterman for DNA sequence alignment and protein alignment for protein structure prediction.

DPX instructions comparison: HGX H100 4-GPU vs. dual-socket 32-core Ice Lake
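Smith-Waterman fills a score matrix in which every cell is the maximum of a few sums, the kind of add-and-compare pattern that DPX instructions are designed to speed up. The CPU reference below computes the best local-alignment score with a linear gap penalty; the scoring values (match +2, mismatch -1, gap 2) are illustrative assumptions, and the code does not use DPX or any GPU API.

```python
def smith_waterman(a: str, b: str, match: int = 2, mismatch: int = -1, gap: int = 2) -> int:
    """Best local-alignment score with a linear gap penalty (CPU reference, O(len(a) * len(b)))."""
    prev = [0] * (len(b) + 1)  # previous row of the score matrix
    best = 0
    for i in range(1, len(a) + 1):
        cur = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            diag = prev[j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            # Each cell is a max over sums, clamped at 0 so alignments can restart locally.
            cur[j] = max(0, diag, prev[j] - gap, cur[j - 1] - gap)
            best = max(best, cur[j])
        prev = cur
    return best


print(smith_waterman("TTACGTTT", "GGACGTGG"))  # shared "ACGT" scores 4 * 2
```

Every cell depends only on its left, upper, and upper-left neighbours, so GPU implementations typically process anti-diagonals or many sequence pairs in parallel.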

Data analytics often consumes the majority of the time in AI application development. Because large datasets are scattered across multiple servers, scale-out solutions built on commodity CPU-only servers get bogged down by a lack of scalable computing performance.

Accelerated servers with H100 deliver the compute power, along with 3 terabytes per second (TB/s) of memory bandwidth per GPU and scalability with NVLink and NVSwitch, to tackle data analytics with high performance and to scale to massive datasets. Combined with NVIDIA Quantum-2 InfiniBand, Magnum IO software, GPU-accelerated Spark 3.0, and NVIDIA RAPIDS™, the NVIDIA data center platform is uniquely able to accelerate these huge workloads with unparalleled levels of performance and efficiency.
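A quick capacity-and-bandwidth estimate shows how per-GPU memory bandwidth translates into scan time for a dataset spread across GPUs. The sketch assumes the 80GB capacity from the specification table, the 3 TB/s bandwidth quoted above, a perfectly even partition, and no memory headroom for anything but the data; the 1 TB dataset and the helper names are hypothetical.

```python
import math

HBM_PER_GPU_GB = 80         # H100 memory capacity (specification table)
HBM_BANDWIDTH_GB_S = 3000   # "3 terabytes per second" per GPU, as stated in the text


def gpus_to_hold(dataset_gb: float, per_gpu_gb: float = HBM_PER_GPU_GB) -> int:
    """Smallest GPU count whose combined memory can hold the dataset (no headroom assumed)."""
    return math.ceil(dataset_gb / per_gpu_gb)


def full_scan_seconds(dataset_gb: float, num_gpus: int,
                      bw_gb_s: float = HBM_BANDWIDTH_GB_S) -> float:
    """One pass over an evenly partitioned dataset, every GPU reading its shard in parallel."""
    return (dataset_gb / num_gpus) / bw_gb_s


dataset_gb = 1000  # hypothetical 1 TB table
n = gpus_to_hold(dataset_gb)
print(n, "GPUs,", f"{full_scan_seconds(dataset_gb, n) * 1e3:.1f} ms per full scan")
```

Real workloads need memory for intermediate results and are rarely pure scans, so this is a best-case bound rather than a sizing guide.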

IT managers seek to maximize utilization (both peak and average) of compute resources in the data center. They often employ dynamic reconfiguration of compute to right-size resources for the workloads in use.

Second-generation Multi-Instance GPU (MIG) in H100 maximizes the utilization of each GPU by securely partitioning it into as many as seven separate instances. With confidential computing support, H100 allows secure end-to-end, multi-tenant usage, ideal for cloud service provider (CSP) environments.

H100 with MIG lets infrastructure managers standardize their GPU-accelerated infrastructure while retaining the flexibility to provision GPU resources at a finer granularity, so that developers securely get the right amount of accelerated compute and all GPU resources are used efficiently.
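Partitioning into as many as seven instances works in fixed slices of compute and memory. The sketch below models an H100 80GB as 7 compute slices and 8 memory slices of roughly 10GB and checks whether a requested mix of instances fits. The profile names and slice counts are assumptions modeled on the H100 80GB MIG profiles (check `nvidia-smi mig -lgip` on real hardware), and the check ignores placement rules, so passing it is necessary but not sufficient.

```python
# Assumed H100 80GB MIG profiles: name -> (compute slices, memory slices).
# Illustrative values; verify with `nvidia-smi mig -lgip` on an actual GPU.
H100_80GB_PROFILES = {
    "1g.10gb": (1, 1),
    "1g.20gb": (1, 2),
    "2g.20gb": (2, 2),
    "3g.40gb": (3, 4),
    "4g.40gb": (4, 4),
    "7g.80gb": (7, 8),
}
TOTAL_COMPUTE_SLICES = 7
TOTAL_MEMORY_SLICES = 8


def fits_on_one_gpu(requested: list[str]) -> bool:
    """Capacity-only check: do the requested instances fit within one GPU's slices?

    Real MIG placement also restricts where each profile may start, so a True
    result does not guarantee the layout will be accepted.
    """
    compute = sum(H100_80GB_PROFILES[p][0] for p in requested)
    memory = sum(H100_80GB_PROFILES[p][1] for p in requested)
    return compute <= TOTAL_COMPUTE_SLICES and memory <= TOTAL_MEMORY_SLICES


print(fits_on_one_gpu(["1g.10gb"] * 7))         # True: seven smallest instances
print(fits_on_one_gpu(["3g.40gb", "4g.40gb"]))  # True: uses every slice
print(fits_on_one_gpu(["4g.40gb", "4g.40gb"]))  # False: needs 8 compute slices
```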

Today’s confidential computing solutions are CPU-based, which is too limited for compute-intensive workloads like AI and HPC. NVIDIA Confidential Computing is a built-in security feature of the NVIDIA Hopper™ architecture that makes H100 the world’s first accelerator with confidential computing capabilities. Users can protect the confidentiality and integrity of their data and applications in use while accessing the unsurpassed acceleration of H100 GPUs. It creates a hardware-based trusted execution environment (TEE) that secures and isolates the entire workload running on a single H100 GPU, multiple H100 GPUs within a node, or individual MIG instances. GPU-accelerated applications can run unchanged within the TEE and don’t have to be partitioned. Users can combine the power of NVIDIA software for AI and HPC with the security of a hardware root of trust offered by NVIDIA Confidential Computing.

The Hopper Tensor Core GPU will power the NVIDIA Grace Hopper CPU+GPU architecture, purpose-built for terabyte-scale accelerated computing and providing 10X higher performance on large-model AI and HPC. The NVIDIA Grace CPU leverages the flexibility of the Arm® architecture to create a CPU and server architecture designed from the ground up for accelerated computing. The Hopper GPU is paired with the Grace CPU using NVIDIA’s ultra-fast chip-to-chip interconnect, delivering 900GB/s of bandwidth, 7X faster than PCIe Gen5. This innovative design will deliver up to 30X higher aggregate system memory bandwidth to the GPU compared to today’s fastest servers and up to 10X higher performance for applications running terabytes of data.
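The "7X faster than PCIe Gen5" figure can be checked against the specification table, and the same numbers give a feel for moving large working sets. The sketch below divides the quoted 900GB/s chip-to-chip bandwidth by the table's 128GB/s PCIe Gen5 figure, treating both as the same kind of aggregate number; the 1 TB working set is hypothetical, and latency and protocol overhead are ignored.

```python
NVLINK_C2C_GB_S = 900   # Grace-to-Hopper chip-to-chip bandwidth quoted in the text
PCIE_GEN5_GB_S = 128    # PCIe Gen5 figure from the specification table


def transfer_seconds(gigabytes: float, gb_per_s: float) -> float:
    """Bandwidth-only transfer time, ignoring latency and protocol overhead."""
    return gigabytes / gb_per_s


speedup = NVLINK_C2C_GB_S / PCIE_GEN5_GB_S  # 7.03125, i.e. roughly the "7X" in the text
working_set_gb = 1000  # hypothetical 1 TB of data streamed to the GPU
print(f"C2C: {transfer_seconds(working_set_gb, NVLINK_C2C_GB_S):.2f} s, "
      f"PCIe Gen5: {transfer_seconds(working_set_gb, PCIE_GEN5_GB_S):.2f} s, "
      f"ratio {speedup:.2f}x")
```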

| Form Factor | H100 SXM | H100 PCIe |
|---|---|---|
| FP64 | 34 teraFLOPS | 26 teraFLOPS |
| FP64 Tensor Core | 67 teraFLOPS | 51 teraFLOPS |
| FP32 | 67 teraFLOPS | 51 teraFLOPS |
| TF32 Tensor Core | 989 teraFLOPS* | 756 teraFLOPS* |
| BFLOAT16 Tensor Core | 1,979 teraFLOPS* | 1,513 teraFLOPS* |
| FP16 Tensor Core | 1,979 teraFLOPS* | 1,513 teraFLOPS* |
| FP8 Tensor Core | 3,958 teraFLOPS* | 3,026 teraFLOPS* |
| INT8 Tensor Core | 3,958 TOPS* | 3,026 TOPS* |
| GPU memory | 80GB | 80GB |
| GPU memory bandwidth | 3.35TB/s | 2TB/s |
| Decoders | 7 NVDEC, 7 JPEG | 7 NVDEC, 7 JPEG |
| Max thermal design power (TDP) | Up to 700W (configurable) | 300-350W (configurable) |
| Multi-Instance GPUs | Up to 7 MIGs @ 10GB each | Up to 7 MIGs @ 10GB each |
| Form factor | SXM | PCIe dual-slot air-cooled |
| Interconnect | NVLink: 900GB/s, PCIe Gen5: 128GB/s | NVLink: 600GB/s, PCIe Gen5: 128GB/s |
| Server options | NVIDIA HGX™ H100 Partner and NVIDIA-Certified Systems™ with 4 or 8 GPUs; NVIDIA DGX™ H100 with 8 GPUs | Partner and NVIDIA-Certified Systems with 1–8 GPUs |
| NVIDIA AI Enterprise | Add-on | Included |

\* With sparsity.
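The specification table is enough for a back-of-the-envelope roofline estimate. The sketch below divides peak FP64 Tensor Core throughput by memory bandwidth to find the ridge point, the arithmetic intensity a kernel needs before compute rather than bandwidth becomes the limit. It uses the FP64 Tensor Core row because that row carries no asterisk; the roofline model is a standard simplification that ignores caches and other effects, and the helper names are hypothetical.

```python
# FP64 Tensor Core peak (teraFLOPS) and memory bandwidth (TB/s) from the table above.
H100_FP64 = {
    "H100 SXM": (67.0, 3.35),
    "H100 PCIe": (51.0, 2.0),
}


def ridge_point(peak_tflops: float, bandwidth_tb_s: float) -> float:
    """Arithmetic intensity (FLOP per byte) at which a kernel stops being memory-bound."""
    return peak_tflops / bandwidth_tb_s  # TFLOP/s divided by TB/s gives FLOP/byte


def attainable_tflops(peak_tflops: float, bandwidth_tb_s: float, flops_per_byte: float) -> float:
    """Roofline bound: performance is capped by either compute or memory traffic."""
    return min(peak_tflops, bandwidth_tb_s * flops_per_byte)


for name, (peak, bw) in H100_FP64.items():
    print(f"{name}: ridge at {ridge_point(peak, bw):.1f} FLOP/byte, "
          f"{attainable_tflops(peak, bw, 2.0):.1f} TFLOPS at 2 FLOP/byte")
```

Kernels with low arithmetic intensity, such as vector updates, sit far left of the ridge point, so on these parts they are limited by memory bandwidth rather than by Tensor Core throughput.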